Reprocessing Project: Messier 31 - The Andromeda Galaxy (6 hours in LHaRGB)

Date: November 3, 2024

Cosgrove’s Cosmos Catalog ➤#0135

Awarded Flickr “Explore” Status on 11-3-24!

October 2022 - Named as “Picture of the Month” by the Webb Deepsky Society on their website!

About the Reprocessing Project

The weather has been overcast, and I am just starting my observatory build, so I was looking for something else to work on. Recently, some students from the University of Rochester asked if they could use the data from this image to help the group learn to use Pixinsight. I agreed with this, and it got me thinking.

I’ve always been proud of this image, probably because it was one of the first times I had successfully folded in Ha data. But it's been three years since I processed it, and since then I have gained access to many new tools and techniques that I did not have back then. Where could I go with this data?

The old project can be seen HERE.

I decided to give this a fresh look, and the results are the subject of this post.

About the Target (from the original Post)

Messier 31 is one of the brightest jewels in the night sky. This is my third attempt at this object. Rather than compose a brand new summary for this post, I will quote my description from a previous one:

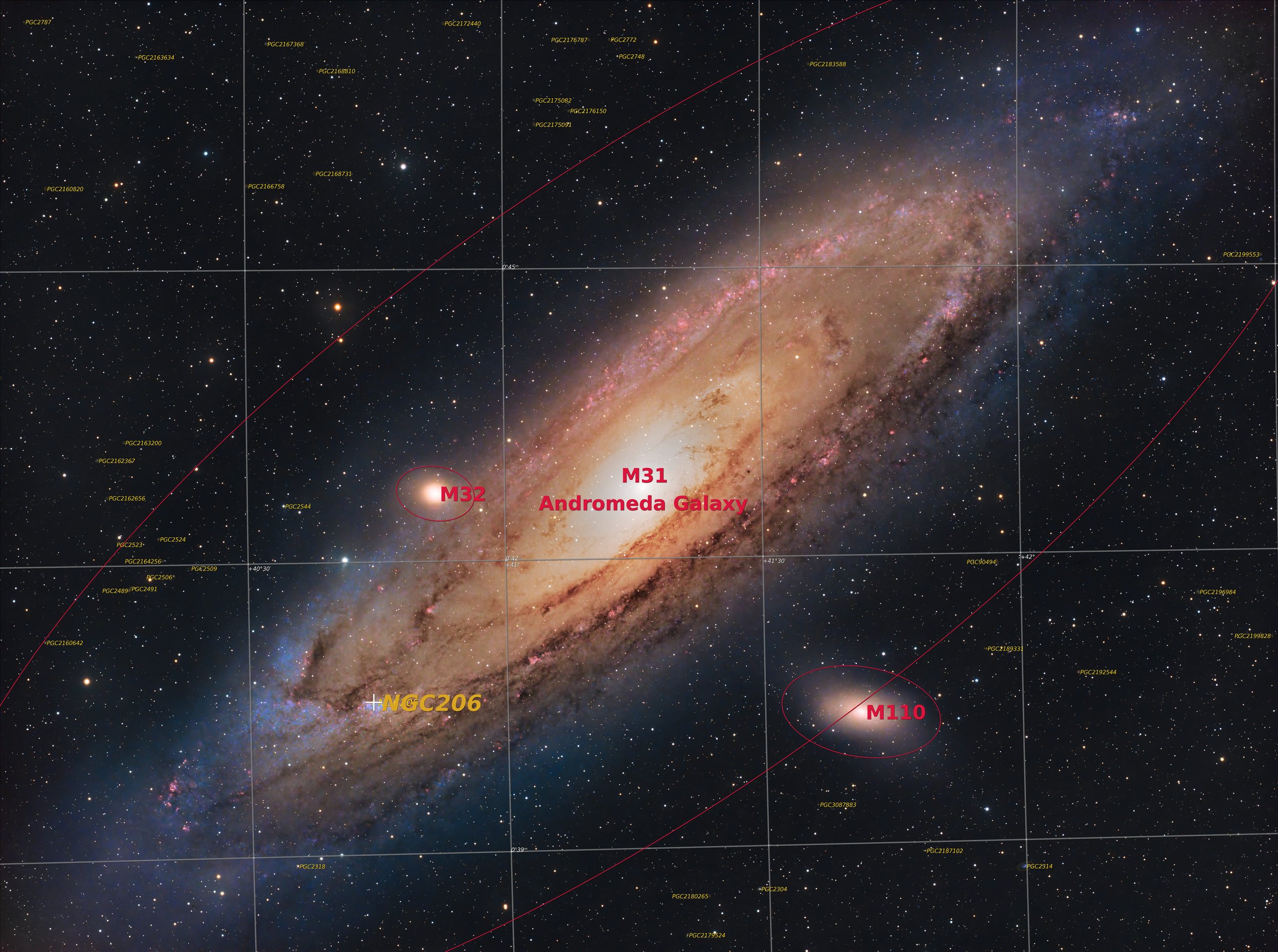

"Messier 31 is also known as NGC 224 and the Andromeda Galaxy, or as the Andromeda Nebula before we knew what galaxies were. It can be seen by the naked eye in the constellation Andromeda (how appropriate!) and is our closest galactic neighbor located 2.5 Million light-years away. It is estimated that it contains about one trillion stars - twice that of our own Milky Way.

M32 (at the top left, closest to the core of M31) and M110 (at the bottom right) can be seen in the frame. These galaxies are neighbors and are, or have been, interacting with M31. M32 appears to have had a close encounter with M31 in the past. It is believed that M32 was once much larger, and that M31 stripped away some of that mass and triggered a period of extensive star formation in M32's core, the results of which we see today. M110 is also currently interacting with M31.

As I shared with my first image of M31, taken about a year ago, it is projected that our Milky Way and M31 will collide in the future, forming an elliptical galaxy. Don't sweat it - it won't happen for another 4.5 billion years…"

Annotated Image

Created with Pixinsight ImageSolver and AnnotateImage scripts.

Location in the Sky

Created with Pixinsight ImageSolver and FinderChart scripts.

About the Project (from the original Post)

The Andromeda Galaxy is one of the most photographed objects in astrophotography.

I have already shot it two times. Everybody who wishes to try astrophotography or claims to be an astrophotographer has shot and posted images of this object.

Frankly - it is almost cliché.

So why bother? Why add to the enormous trove of already existing images?

Well - for one thing - it is very big and very bright.

It measures some 1 x 3 degrees. The full moon measures 0.5 degrees, so M31 is 2 moons by 6 moons in size. This surprises most people.

It has an apparent magnitude of 3.5 and is one of the farthest objects that can be seen with the naked eye.

When you are talking about a distance of 2.5 million light-years, you are dealing with scales that your mind has trouble comprehending. When you see M31 with your naked eye, think of it - you are responding to a sensation caused by photons that traveled for 2.5 million years before they landed on your retina!

Short and crude exposures will show amazing details - the bright core, the spiral arms, companion galaxies, dust lanes, knots, and twists of matter. M31 is a feast for the eyes.

So why make yet another image? Why not? It's simply an amazing target with many opportunities to put your stamp on it and see what you can do!

When I first started, I was getting some early results - and more than a little impressed with myself. Of course, I was probably the only person in the world who was impressed with what I had done. Then I took a short integration of M31 and did some simple processing - the results stunned me - it was just so impressive compared to my earlier images.

As I showed the image around to family and friends, they seemed impressed as well! “Wow! Did you take this?” While that kind of response was a little insulting, I was still very glad to finally be evoking some kind of positive reaction in others.

Previous Attempts

That first attempt with M31 can be seen HERE. My second attempt can be seen HERE.

I'll include the images below:

My first attempt at M31 - centered on the core of the galaxy - Sept 2019

My second attempt Aug 2020 - this time I attempted to include the companion galaxies, M32 and M110.

Both of these images were taken with my William Optics FLT 132MM APO Refractor with a focal length of 920mm. With this scope, I could not get the whole galaxy in the frame!

The William Optics 132mm FLT APO platform took my first two images of M31.

The Askar FRA400 Platform was used to shoot the new M31 image.

At the time, an OSC (One-Shot-Color) camera was used. It did a good job but did not have the flexibility of a mono camera with wideband and narrowband filters.

Now that I am getting good results with my portable wide-field FRA400 scope, I finally had the ability to get the whole galaxy in the field of view for the very first time - and I could also shoot with a mono camera.
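To put rough numbers on the framing difference, the sketch below computes the field of view and pixel scale at both focal lengths. It assumes the ASI1600MM Pro's published sensor format (4656 x 3520 pixels at 3.8 µm) for both rows. The 920 mm row is only a like-for-like comparison, since the earlier images were taken with a different OSC camera. M31's 1 x 3 degree extent from above is printed for reference.

```python
import math

# Assumed sensor: ZWO ASI1600MM Pro, 4656 x 3520 pixels at 3.8 um (published specs).
PIXEL_SIZE_UM = 3.8
WIDTH_PX, HEIGHT_PX = 4656, 3520

# M31's rough extent as quoted in the text, in degrees.
M31_LONG_AXIS_DEG = 3.0
M31_SHORT_AXIS_DEG = 1.0


def fov_deg(sensor_mm: float, focal_length_mm: float) -> float:
    """Angular field of view (degrees) across one sensor dimension."""
    return math.degrees(2 * math.atan(sensor_mm / (2 * focal_length_mm)))


def pixel_scale_arcsec(pixel_um: float, focal_length_mm: float) -> float:
    """Image scale in arcsec/pixel; 206.265 folds in the micron-to-mm conversion."""
    return 206.265 * pixel_um / focal_length_mm


def describe(focal_length_mm: float) -> dict:
    """Field of view (width, height, diagonal) and pixel scale for one scope."""
    width_mm = WIDTH_PX * PIXEL_SIZE_UM / 1000
    height_mm = HEIGHT_PX * PIXEL_SIZE_UM / 1000
    diag_mm = math.hypot(width_mm, height_mm)
    return {
        "fov_w": fov_deg(width_mm, focal_length_mm),
        "fov_h": fov_deg(height_mm, focal_length_mm),
        "fov_diag": fov_deg(diag_mm, focal_length_mm),
        "scale": pixel_scale_arcsec(PIXEL_SIZE_UM, focal_length_mm),
    }


print(f"M31: ~{M31_LONG_AXIS_DEG} x {M31_SHORT_AXIS_DEG} deg "
      f"({M31_LONG_AXIS_DEG / 0.5:.0f} x {M31_SHORT_AXIS_DEG / 0.5:.0f} full-moon diameters)")
for name, fl in [("WO FLT132, 920 mm", 920), ("Askar FRA400, 400 mm", 400)]:
    d = describe(fl)
    print(f"{name}: {d['fov_w']:.2f} x {d['fov_h']:.2f} deg "
          f"(diagonal {d['fov_diag']:.2f}), {d['scale']:.2f} arcsec/px")
```

Under these assumptions the 400 mm setup covers roughly 2.5 x 1.9 degrees (about 3.2 degrees on the diagonal) at about 2 arcsec per pixel, while the same sensor at 920 mm would see only about 1.1 x 0.8 degrees, less than a fifth of the area.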

My plan was to collect LRGB filter data along with some Ha subs. The Ha filter emphasizes areas of HII activity - typically associated with new star formation - and folds that into the image of the galaxy. When I did this with other galaxies, I was impressed with the drama it seemed to bring to the image. Now, with a large and detailed object like M31, it could be the icing on the cake.

So why shoot it again?

To see if I could better my earlier efforts.

To finally get a chance to get the whole galaxy into the image

To see what I could do by adding Ha data into the mix

To use more advanced processing techniques on it

To visit an old friend…

Data Capture (from the original Post)

Between November 5th and 8th, we had a surprisingly good stretch of clear, moonless skies. As I have noted in my recent image project posts, this is a wonderful time of year if you have some decent weather, since it gets dark early and you can have as much as 12 hours of capture time each night. I still have to wait for my targets to clear the tree line, and I can only collect subs for a fairly short time before each one descends back into the trees. But I can capture targets one after another all night long!

Ultimately, I ended up with slightly over 6 hours of data. The LRGB subs were shot at 90 seconds each (the Ha subs at 300 seconds). I often shoot RGB subs at 120 seconds, but since the FRA400 astrograph is a faster f/5.5 optical system (compared to my other scopes, which are f/7 and f/8.35, respectively), I opted for a shorter time.
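The choice of a shorter sub length can be roughed out with a common rule of thumb: for an extended target and the same camera, the light reaching each pixel scales roughly as 1/N², where N is the focal ratio. This sketch is an approximation that ignores differences in optics, filters, and sky conditions; it scales a 120-second sub from the f/7 and f/8.35 scopes to f/5.5, using the f-ratios quoted above.

```python
# Rule of thumb (an assumption): focal-plane irradiance for an extended target
# scales as 1 / N**2, so keeping signal per pixel constant needs exposure ~ N**2.

def equivalent_exposure(base_seconds: float, base_ratio: float, new_ratio: float) -> float:
    """Scale an exposure time from one focal ratio to another."""
    return base_seconds * (new_ratio / base_ratio) ** 2


for ratio in (7.0, 8.35):
    t = equivalent_exposure(120, ratio, 5.5)
    print(f"120 s at f/{ratio} ~ {t:.0f} s at f/5.5")
```

By this estimate, 90 seconds at f/5.5 collects somewhat more signal per sub than 120 seconds did at either slower focal ratio, so it is a modest, conservative reduction rather than an exact equivalent.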

Processing - Old Data and New Methods

So - what is different this time around?

First, I am using a new WBPP version with more refined capabilities.

Second, I am using the suite of AI tools from RC-Astro, which has revolutionized how I do deconvolution and noise handling. It also allows me to do starless processing! In the past, I was very concerned about negatively impacting my stars when doing the aggressive stretches needed to pull out detail in faint, nebulous regions. Now I can process the stars separately from the nebulae and dust.

I also have three more years of processing under my belt - I think this makes a big difference - but often one that is hard for me to quantify.

The first time around, I blended in the Ha contribution very early on in the linear domain, using the SHO-AIP Script. The idea here was that Ha should be part of the red signal, so fold that in early and carry it through the processing chain.

This time around, I decided to change the approach. The plan was to:

Process the RGB, L, and Ha images separately until they reach the nonlinear state.

Then fold in the L image, creating the traditional LRGB image.

Finally, add the Ha image to augment the LRGB.

In my original processing, I seemed to have an imbalance in the warm colors across the galaxy. With this processing effort, I saw no such problem and did not have to deal with it. It must have been a unique issue based on how I had processed things the first time!

So, this was my basic approach. In the end, I ran into two issues that I seem to deal with often these days:

How saturated the colors should be

How much to reduce the stars

I tend to be a “High-Color” guy, and as such, I often go too far. Same problem here again. My first version of the image had very strong orange saturations.

I have been fighting star bloat since I first started astrophotography, and now, with our more powerful AI tools, I tend to go too far, reducing the size of the final stars too much.

I often share early versions of my images with my local AP friends, and when I did this, they gave me feedback that the orange saturations were too strong and the stars too far reduced. So, I backed off on both of those areas.

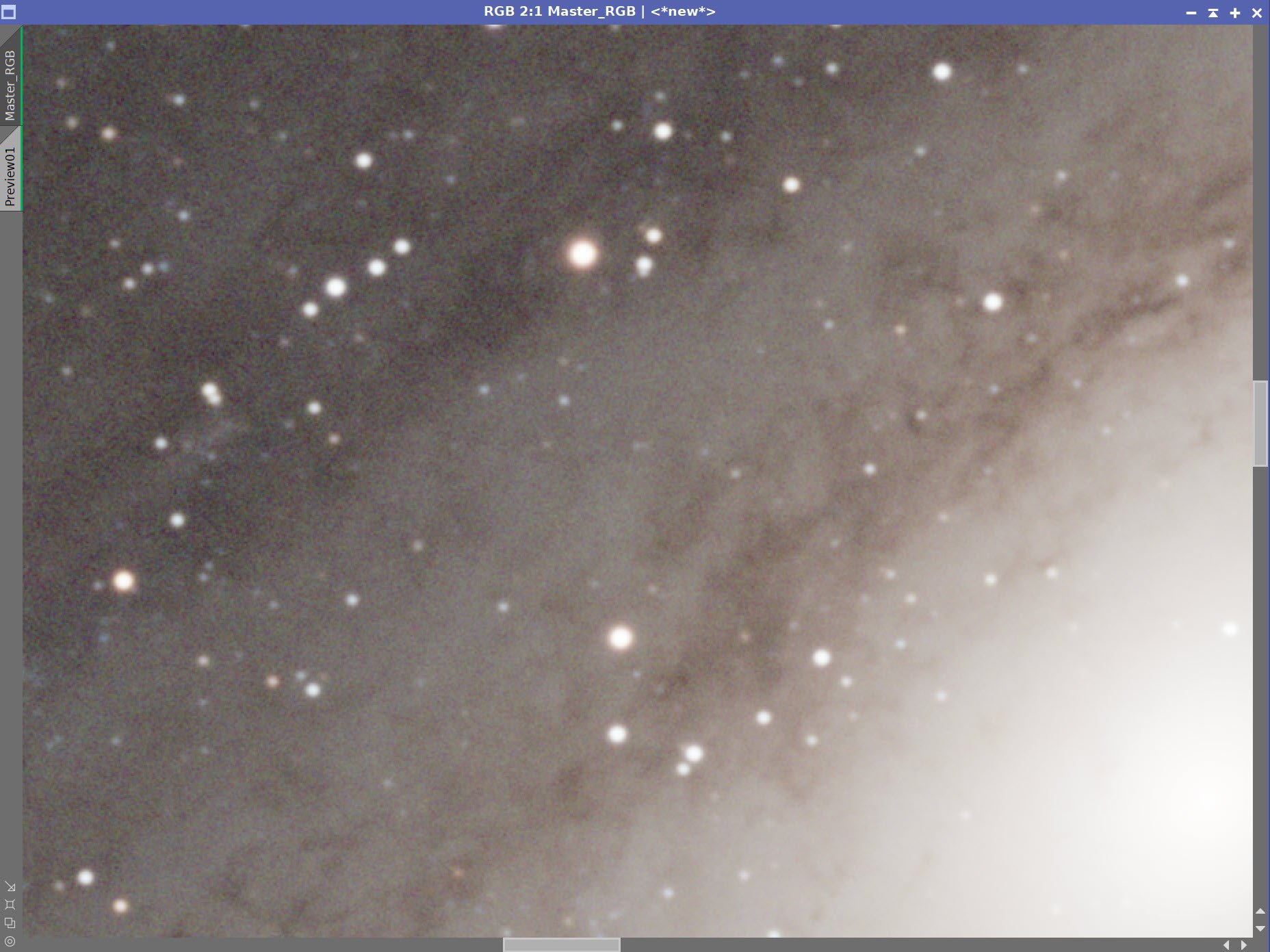

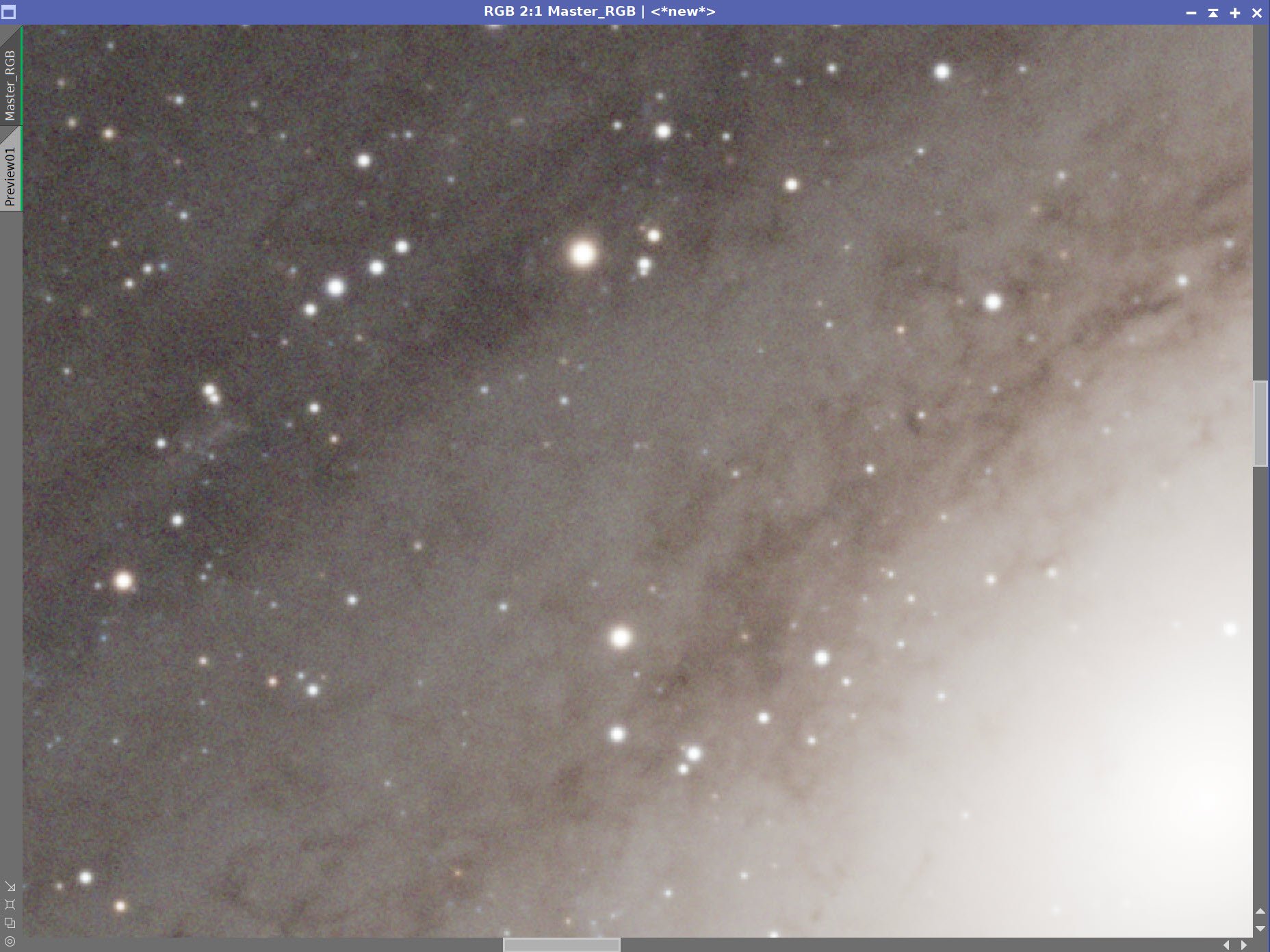

To give you a feel for how this played out, here are my first and final images for comparison.

My initial version - too much orange, and stars too small.

My final version with larger stars and less orange saturation.

Results

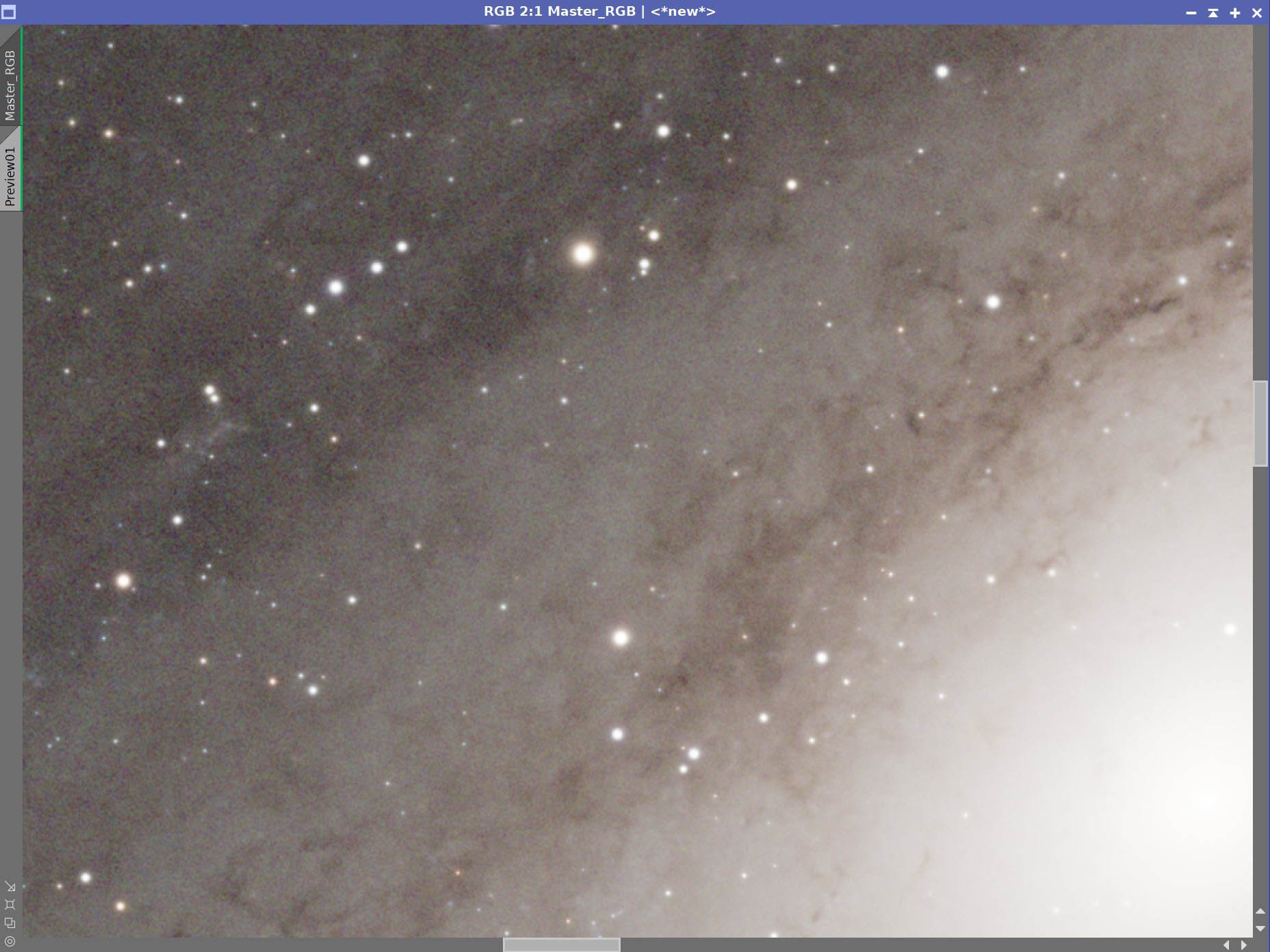

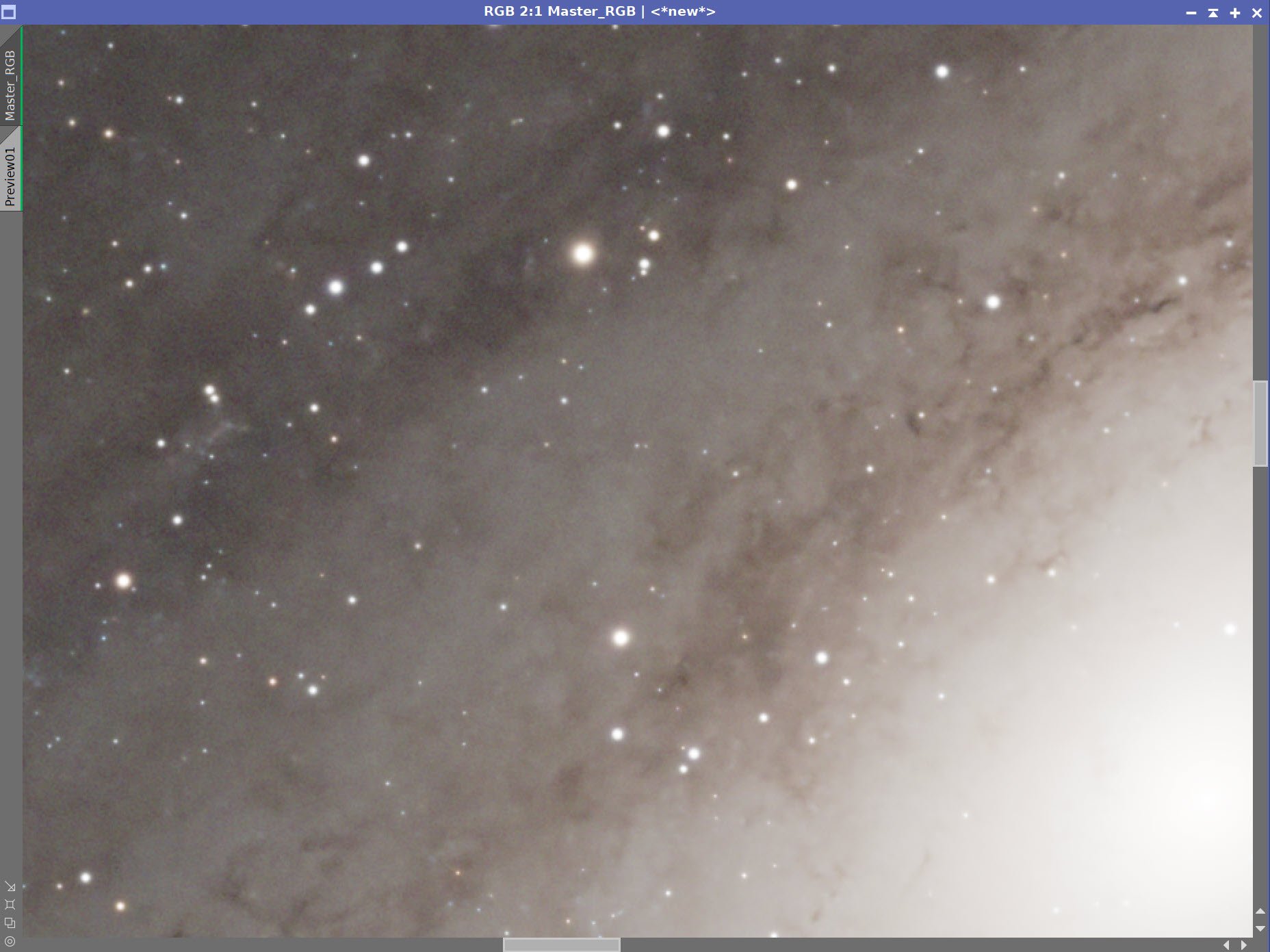

I have to say that I am very pleased with how the final image came out. I love the detail in the outer fringes of the galaxy that I was able to bring out and the color as well.

My original version had a red color balance on the right side and a blue balance on the left.

The final version just seems so much richer to me.

More Info

Wikipedia: Messier 31

NASA: Take a "Swift" Tour of the Andromeda Galaxy

Carnegie Science: Hubble's Famous M31 VAR! plate

The SkyLive: Messier 31

Capture Details

Lights

Frame counts are after bad or questionable frames were culled.

48 x 90 seconds, bin 1x1 @ -15C, unity gain, ZWO Gen II L Filter

47 x 90 seconds, bin 1x1 @ -15C, 0 gain, ZWO Gen II R Filter

40 x 90 seconds, bin 1x1 @ -15C, unity gain, ZWO Gen II G Filter

37 x 90 seconds, bin 1x1 @ -15C, unity gain, ZWO Gen II B Filter

21 x 300 seconds, bin 1x1 @ -15C, unity gain, Astronomik 6nm Ha Filter

Total of 6 hours 3 minutes
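As a quick check on the total, the culled frame counts and sub lengths above multiply out as follows.

```python
# Culled frame counts and sub lengths (seconds) from the Lights list above.
lights = {
    "L": (48, 90),
    "R": (47, 90),
    "G": (40, 90),
    "B": (37, 90),
    "Ha": (21, 300),
}

total_s = sum(count * seconds for count, seconds in lights.values())
hours, rem = divmod(total_s, 3600)
minutes, seconds = divmod(rem, 60)
print(f"Total: {total_s} s = {hours} h {minutes} min {seconds} s")

# Per-filter breakdown in minutes.
for name, (count, secs) in lights.items():
    print(f"{name:>2}: {count * secs / 60:.1f} min")
```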

Cal Frames

25 Darks at 300 seconds, bin 1x1, -15C, gain 0

50 Darks at 90 seconds, bin 1x1, -15C, gain 0

25 Dark Flats at each flat exposure time, bin 1x1, -15C, gain 0

15 R Flats

15 G Flats

15 B Flats

15 L Flats

15 Ha Flats

Capture Hardware:

Scope: Askar FRA400 72mm f/5.6 Quintuplet Astrograph

Guide Scope: William Optics 50mm

Camera: ZWO ASI1600MM Pro with ZWO filter wheel, ZWO LRGB filter set, and Astronomik 6nm narrowband filter set

Guide Camera: ZWO ASI290Mini

Focus Motor: Pegasus ZWO EAF 5V

Mount: iOptron CEM26

Polar Alignment: iPolar camera

Software:

Capture Software: PHD2 Guider, Sequence Generator Pro controller

Image Processing: Pixinsight, Photoshop - assisted by Coffee, extensive processing indecision and second-guessing, editor regret and much swearing…..

Image Processing Detail (Note: this is mostly based on Pixinsight)

1. Assess all captures with Blink

Light images

Lum images - 4 images rejected due to heavy clouds

Red images - some very light gradients seen - but none removed

Green Images - some very minor gradients seen - but none removed

Blue Images - all frames look great!

Ha Images - clouds moved through - 5 images removed

Flat Frames - look great!

Flat Darks

Taken from the IC1805 project. The green dark flats are missing, but the green flats have the same exposure time as the red ones, so the red dark flats can be used.

Darks - taken from the IC1805 project

2. WBPP Script

WBPP version 2.7.8

Load all files

Dark exp tolerance set to zero

Light exp tolerance set to zero

Pedestal auto for all frames

CC auto for all groups

Select max quality

Ref image set to auto

Select target folder

Select Drizzle Processing at 2x

Executed in 43 minutes - no error in 1:07:54

WBPP setup.

Post Processing View of WBPP

WBPP Pipeline view

3. Import Master images and Rename

Master images. Top: Lum and Ha. Bottom: Red, Green, and Blue.

4. Process the Lum Image

Remove any gradients with DBE. Choose a sample pattern that misses the body of the galaxy. Use Subtraction as the correction method. See below.

Get an idea of star sizes by running PSFImage: the resulting star sizes are FWHM X = 4.6, FWHM Y = 4.46

Run BXT “correct only.” This cleans up stars at the edge of the field. There's not really a lot to fix there.

Experiment with BXT to find the best size for nonstellar corrections. In this case, I felt I could go a lot further than the PSFImage values would suggest - I went almost 2x further.

Run BXT in full mode. See the screenshot of the tool panel for the parameters used.

Run NXT at 0.5 to “knock the fizz off.”

Run STX to go starless. We will end up using the starless image only, so I will eliminate the stars-only version.
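BlurXTerminator is a proprietary neural-network tool, so its internals can't be shown here. As a stand-in that illustrates the same basic idea, the sketch below uses classical Richardson-Lucy deconvolution with a Gaussian PSF built from the Lum PSFImage values (treated as pixels, which is an assumption). It converts FWHM to a Gaussian sigma with sigma = FWHM / (2 sqrt(2 ln 2)) and runs on a synthetic two-star frame; the function names are hypothetical.

```python
import numpy as np

# FWHM -> sigma for a Gaussian: sigma = FWHM / (2 * sqrt(2 * ln 2)) ~ FWHM / 2.355
FWHM_TO_SIGMA = 1.0 / (2.0 * np.sqrt(2.0 * np.log(2.0)))


def gaussian_psf(fwhm_px: float, size: int = 25) -> np.ndarray:
    """Normalized, circularly symmetric 2-D Gaussian PSF (odd size, centered)."""
    sigma = fwhm_px * FWHM_TO_SIGMA
    r = np.arange(size) - size // 2
    xx, yy = np.meshgrid(r, r)
    psf = np.exp(-(xx**2 + yy**2) / (2.0 * sigma**2))
    return psf / psf.sum()


def fft_convolve(image: np.ndarray, kernel: np.ndarray) -> np.ndarray:
    """Circular (wrap-around) convolution via FFT with a centered kernel."""
    padded = np.zeros(image.shape, dtype=float)
    kh, kw = kernel.shape
    padded[:kh, :kw] = kernel
    # Move the kernel's center to (0, 0) so the output is not shifted.
    padded = np.roll(padded, (-(kh // 2), -(kw // 2)), axis=(0, 1))
    return np.real(np.fft.ifft2(np.fft.fft2(image) * np.fft.fft2(padded)))


def richardson_lucy(observed: np.ndarray, psf: np.ndarray, iterations: int = 50) -> np.ndarray:
    """Classical Richardson-Lucy deconvolution, starting from the observed image."""
    estimate = observed.astype(float).copy()
    psf_mirror = psf[::-1, ::-1]
    eps = 1e-12  # guards against division by zero
    for _ in range(iterations):
        reblurred = fft_convolve(estimate, psf)
        ratio = observed / (reblurred + eps)
        estimate = estimate * fft_convolve(ratio, psf_mirror)
    return estimate


# Mean of the Lum PSFImage FWHM values above (assumed to be in pixels).
fwhm = (4.6 + 4.46) / 2
psf = gaussian_psf(fwhm)

# Synthetic frame: two point sources on a faint, flat background.
truth = np.full((64, 64), 0.01)
truth[20, 20] = 1.0
truth[40, 45] = 0.5
blurred = fft_convolve(truth, psf)
restored = richardson_lucy(blurred, psf)
print(f"peak: blurred {blurred.max():.3f}, restored {restored.max():.3f}")
```

On real data the iteration count is the main knob: too few leaves stars soft, while too many amplifies noise and produces ringing around bright stars, which is part of the appeal of a trained tool like BXT.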

DBE Sample pattern for Lum image.

Lum image before DBE.

Lum image after DBE.

Background image subtracted.

PSFImage stats for the Lum image

BXT Settings used.

Lum Image Before BXT, After BXT Correct Only, After BXT Full Correction, After NXT=0.5

Lum image before Star Removal

Lum Starless image

Lum stars only.

5. Process the Linear RGB Image

Create the initial RGB color image using the ChannelCombination tool

Run DBE with subtraction and the same sampling pattern as used with the Lum image. This will also remove the weird color bias.

Run SPCC, using the setup shown in the panel snap below.

Run PSFImage and compare with the Lum results - they are pretty similar: FWHM X = 4.54, FWHM Y = 4.26

Run BXT Correct only.

Run BXT full, use the same parameters as used on Lum (see panel snap below)

Run NXT with a level of 0.5

Use STX to extract the stars. These stars WILL be used!
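SPCC itself works from Gaia spectrophotometry and the actual filter and sensor response curves, which is well beyond a short example. The core of the regression step it reports can still be sketched: compare each star's measured R/G and B/G ratios with the ratios expected from a reference, fit a slope, and turn the slopes into per-channel multipliers. The through-origin least-squares fit, green as the anchor channel, and the synthetic star data are all assumptions for illustration.

```python
import numpy as np


def slope_through_origin(x: np.ndarray, y: np.ndarray) -> float:
    """Least-squares slope k for the model y = k * x (no intercept)."""
    return float(np.dot(x, y) / np.dot(x, x))


def white_balance_factors(measured: np.ndarray, reference: np.ndarray) -> np.ndarray:
    """
    measured, reference: (n_stars, 3) arrays of R, G, B star fluxes.
    Returns per-channel multipliers with green held at 1.0.
    """
    k_r = slope_through_origin(reference[:, 0] / reference[:, 1],
                               measured[:, 0] / measured[:, 1])
    k_b = slope_through_origin(reference[:, 2] / reference[:, 1],
                               measured[:, 2] / measured[:, 1])
    # If the image's R/G runs k_r times too high, scale red down by 1 / k_r.
    return np.array([1.0 / k_r, 1.0, 1.0 / k_b])


# Synthetic stars: "true" colors plus a hypothetical camera/filter imbalance.
rng = np.random.default_rng(0)
reference = rng.uniform(0.2, 1.0, size=(200, 3))
imbalance = np.array([1.3, 1.0, 0.8])
measured = reference * imbalance * (1 + 0.01 * rng.standard_normal((200, 3)))

factors = white_balance_factors(measured, reference)
print("R, G, B factors:", np.round(factors, 3))

# Apply to a linear RGB image of shape (H, W, 3) by broadcasting over channels.
image = rng.uniform(0.0, 0.1, size=(4, 4, 3))
calibrated = image * factors
```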

The Initial RGB image.

RGB image DBE Sampling Pattern

RGB image before DBE

RGB image after DBE

RGB background image subtracted.

The SPCC Panel as set up and used.

The regression result obtained.

RGB after SPCC.

RGB Before BXT, After BXT Correct Only, After BXT Full Correction, After NXT=0.5

RGB before Star Removal.

RGB Starless Image

RGB Stars only

6. Process the Ha Image

Run DBE using subtraction and the same sampling plan as with L and RGB

Run BXT Correct only

Run full BXT using the same settings as with the other images

Run NXT = 0.55

Remove the stars with STX, discarding the Ha stars - the RGB stars are the ones that will be used
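DBE fits a smooth surface through the sample boxes and removes it. As a simplified stand-in (a low-order 2-D polynomial, closer in spirit to AutomaticBackgroundExtractor than to DBE's own surface model), the sketch below takes the median of each sample box placed off the galaxy, fits the surface by least squares, and subtracts it. The function names, box size, and synthetic frame are hypothetical.

```python
import numpy as np


def poly_terms(x: np.ndarray, y: np.ndarray, degree: int) -> np.ndarray:
    """Design matrix with every term x**i * y**j where i + j <= degree."""
    cols = [x**i * y**j for i in range(degree + 1) for j in range(degree + 1 - i)]
    return np.stack(cols, axis=-1)


def subtract_background(image, samples, degree=2, box=5):
    """Fit a polynomial to median sample-box values and subtract it.

    `samples` are (row, col) centers placed off the target. The model's median
    is added back so the background stays at a positive level (a choice made
    for this sketch, not a DBE option).
    """
    h, w = image.shape
    half = box // 2
    xs, ys, vals = [], [], []
    for r, c in samples:
        patch = image[max(r - half, 0):r + half + 1, max(c - half, 0):c + half + 1]
        xs.append(c / (w - 1))
        ys.append(r / (h - 1))
        vals.append(np.median(patch))
    design = poly_terms(np.array(xs), np.array(ys), degree)
    coeffs, *_ = np.linalg.lstsq(design, np.array(vals), rcond=None)
    yy, xx = np.mgrid[0:h, 0:w]
    model = poly_terms(xx / (w - 1), yy / (h - 1), degree) @ coeffs
    return image - model + np.median(model), model


# Synthetic frame: a smooth gradient plus an elongated "galaxy" in the middle.
h, w = 100, 150
yy, xx = np.mgrid[0:h, 0:w]
gradient = 0.05 + 0.1 * xx / w + 0.03 * (yy / h) ** 2
galaxy = 0.5 * np.exp(-(((xx - 75) / 20) ** 2 + ((yy - 50) / 8) ** 2))
frame = gradient + galaxy

# Sample grid that skips an ellipse around the galaxy body.
samples = [(r, c) for r in range(5, h, 15) for c in range(5, w, 15)
           if ((c - 75) / 60) ** 2 + ((r - 50) / 30) ** 2 > 1]
corrected, model = subtract_background(frame, samples)
```

Subtraction is the natural choice here because sky-glow gradients add light to the image; division is meant for multiplicative effects such as vignetting, which the flats already handle. Reusing one sample layout for L, RGB, and Ha works because WBPP registered all the masters to the same reference frame.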

Ha sampling Plan for DBE

Ha before DBE

Ha after DBE

Ha DBE Background removed

Ha Image Before BXT, After BXT Correct Only, After BXT Full Correction, After NXT=0.5

Master Ha image before star extraction

Master Ha starless

7. Take the Lum Image Nonlinear and Process

Go nonlinear using the STF->HT Method

Use CT to adjust the contrast using an S-shaped curve.

Using the GAME Script, create a gradient mask that covers the Galaxy

Apply the mask

Run HDRMT with levels = 10 and the Galaxy Mask in place. This will pull some more detail out of the bright region of the core.

Run LHE with a radius of 300, a contrast level of 2.0, an amount of 0.2, and a 10-bit histogram - with the Galaxy Mask in place

Remove the mask

Run NXT = 0.5
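The STF->HT step drives every nonlinear stretch in this workflow, so it may help to unpack it. The ScreenTransferFunction auto-stretch picks a shadows clipping point from the image's median and normalized MAD, then a midtones balance for PixInsight's midtones transfer function (MTF); handing the STF to HistogramTransformation applies those values to the pixels. The sketch below follows that recipe using the commonly cited defaults (shadows clipping at -2.8 MADN, target background 0.25). It handles one channel only, and small details, such as measuring the median after rescaling for the clipped shadows, are simplifications that may differ from PixInsight's own code.

```python
import numpy as np


def mtf(m, x):
    """Midtones transfer function: maps 0 -> 0, m -> 0.5, 1 -> 1 (for 0 < m < 1)."""
    x = np.asarray(x, dtype=float)
    return ((m - 1.0) * x) / ((2.0 * m - 1.0) * x - m)


def auto_stretch(image, shadows_clip=-2.8, target_bg=0.25):
    """STF-style automatic stretch of a single-channel linear image in [0, 1].

    Returns the stretched image, the shadows clipping point c0 and the
    midtones balance m, i.e. the values HistogramTransformation would apply.
    """
    med = float(np.median(image))
    madn = 1.4826 * float(np.median(np.abs(image - med)))  # MAD scaled to ~sigma
    c0 = min(max(med + shadows_clip * madn, 0.0), 1.0)
    # Rescale so c0 -> 0, then pick m so the rescaled median lands on target_bg.
    x = np.clip((image - c0) / (1.0 - c0), 0.0, 1.0)
    x_med = (med - c0) / (1.0 - c0)
    # Solving mtf(m, x_med) = target_bg for m gives m = mtf(target_bg, x_med).
    m = float(mtf(target_bg, x_med))
    return mtf(m, x), c0, m


# Synthetic linear frame: faint noisy background plus one small bright feature.
rng = np.random.default_rng(1)
linear = np.clip(0.01 + 0.001 * rng.standard_normal((256, 256)), 0.0, 1.0)
linear[100:110, 100:110] += 0.2
stretched, c0, m = auto_stretch(linear)
print(f"shadows {c0:.4f}, midtones {m:.5f}, stretched median {np.median(stretched):.3f}")
```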

The initial nonlinear Lum image.

Boost the contrast with CT using an ‘S’ Curve.

The curve adjustment used.

The Galaxy Mask

HDRMT applied with the Galaxy mask so only the galaxy is impacted.

After NXT = 0.5

Apply LHE with the parameters specified, with the galaxy mask applied

After a final CT adjustment.

8. Process the RGB and LRGB Images

Take the RGB linear starless image nonlinear using the STF->HT method

Apply CT to adjust tone and contrast. In preparation for inserting the L image, it is best to make the color a little dark and strong.

Use ImageBlend script in the color mode to determine the best way to mix in L signal. See the Panel Snap showing where I ended up

Apply a series of CT adjustments to fine-tune the LRGB result.
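The idea behind the color-mode blend, keeping the color from the RGB while taking brightness and detail from L, can be sketched with a simplified model: scale each RGB pixel so its luminance matches the L image, leaving the R:G:B ratios untouched. The Rec. 709 weights and the per-pixel gain in linear RGB are assumptions for illustration and may differ substantially from how the ImageBlend script's color mode works.

```python
import numpy as np

# Rec. 709 luminance weights for linear RGB (an assumption for this sketch).
REC709 = np.array([0.2126, 0.7152, 0.0722])


def replace_luminance(rgb: np.ndarray, lum: np.ndarray, eps: float = 1e-6) -> np.ndarray:
    """Give each pixel the brightness of `lum` while keeping its R:G:B ratios."""
    y = rgb @ REC709                    # current luminance, shape (H, W)
    gain = lum / np.maximum(y, eps)     # per-pixel brightness correction
    return np.clip(rgb * gain[..., None], 0.0, 1.0)


rng = np.random.default_rng(2)
rgb = rng.uniform(0.2, 0.3, size=(4, 4, 3))   # smooth, color-carrying RGB
lum = rng.uniform(0.1, 0.2, size=(4, 4))      # detailed luminance
lrgb = replace_luminance(rgb, lum)
```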

The initial RGB nonlinear image.

After CT adjustment

The ImageBlend script panel used to combine the L and RGB images.

The resulting LRGB Image

Final LRGB image after CT adjustment

9. Process the Nonlinear Ha Starless image and Create the Initial HaLRGB Image

Convert the Master Ha Starless image to nonlinear space using the STF->HT method. This is simple and nice to use, and since we no longer have to worry about preserving stars - it works well here.

Use CT to drop out the background and focus on key Ha features.

Use the Toolbox Script CombineHaWithRGB to fold the Ha data in and create the initial HaLRGB image. This is my first use of this tool, and I found that I liked using it and the results it provided.

Use CT to lighten outer portions of the galaxy.

Run SCNR on green with an amount of 0.40 to deal with some slight green noise seen when viewing close-up.

Let’s deal with the super strong orange saturation.

Create an orange color mask.

Use Color_Mask_Mod to create a mask with starting and ending hues of 360-61

Boost contrast with CT

Now, blur the mask. I used Bill Blanshan’s MaskBlur script and ran it several times.

Apply the Mask

Use CT to reduce the orange saturation

Remove the mask

I really want to bring out the outer extent of the galaxy, so run ExponentialTransform to do this. I used PIP, an order of 0.5, and the lightness mask.

Use CT to adjust the result.

Run NXT at 0.6 to reduce the noise.

I am seeing some residual magenta gradients at the extreme ends of the galaxy. DBE must have missed them, and they have become more obvious with all of the stretching, especially after I applied the ExponentialTransform.

Using GAME, create an EdgeMask where the magenta is seen.

Apply the mask

Use CT to desaturate these areas.
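The orange-mask sequence above (hue range, blur, then a saturation cut with the mask in place) can be sketched as follows. The hue test wraps around 360 degrees, so a 360-61 range covers reds through oranges. Several pieces are stand-ins chosen for this sketch rather than how the actual tools work: weighting the mask by saturation (colorsys reports hue 0, i.e. red, for neutral pixels), a repeated box blur in place of the MaskBlur script, and blending toward each pixel's gray level in place of a CT saturation curve. The CT contrast boost on the mask is left out.

```python
import colorsys

import numpy as np


def hue_mask(rgb: np.ndarray, start_deg: float, end_deg: float) -> np.ndarray:
    """Saturation-weighted mask of pixels whose hue is in [start_deg, end_deg].

    A range with start > end wraps past 360, so (360, 61) covers 0-61 degrees.
    """
    h, w, _ = rgb.shape
    mask = np.zeros((h, w))
    for r in range(h):
        for c in range(w):
            hue, sat, _ = colorsys.rgb_to_hsv(*rgb[r, c])
            deg = hue * 360.0
            if start_deg <= end_deg:
                inside = start_deg <= deg <= end_deg
            else:
                inside = deg >= start_deg or deg <= end_deg
            # Neutral pixels get hue 0 from colorsys; weighting by saturation
            # keeps them out of the mask.
            mask[r, c] = sat if inside else 0.0
    return mask


def box_blur(mask: np.ndarray, radius: int = 1, passes: int = 2) -> np.ndarray:
    """Repeated separable box blur (zero-padded at the edges)."""
    kernel = np.ones(2 * radius + 1) / (2 * radius + 1)
    out = mask.astype(float)
    for _ in range(passes):
        for axis in (0, 1):
            out = np.apply_along_axis(lambda v: np.convolve(v, kernel, mode="same"), axis, out)
    return out


def desaturate(rgb: np.ndarray, mask: np.ndarray, amount: float = 0.5) -> np.ndarray:
    """Blend masked pixels toward their own gray level by amount * mask."""
    gray = rgb.mean(axis=-1, keepdims=True)
    weight = (amount * mask)[..., None]
    return rgb * (1.0 - weight) + gray * weight


img = np.empty((9, 9, 3))
img[...] = [0.2, 0.3, 0.6]          # bluish surroundings
img[3:6, 3:6] = [0.9, 0.5, 0.1]     # an over-saturated orange patch
mask = box_blur(hue_mask(img, 360, 61))
out = desaturate(img, mask)
```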

The initial nonlinear Ha image

Tonescale adjust with CT to drop out background.

Here is the panel from the script used to fold in the Ha data and the parameters used after some playing around.

Initial HaLRGB image

After CT Adjust

Creating the OrangeMask

Using ColorMask_Mod to create the Orange Mask

CT contrast boost

The initial OrangeMask

After blurring.

CT saturation adjustment with the Orange Mask in place

Adjusting the tonescale from the ExponentialTransform Operation

After using ExponentialTransform to lighten the faint areas.

Run NXT at 0.6 to clean up the noise a bit.

CT to darken center.

The EdgeMask created with GAME.

The Final HaLRGB image.

10. Process the RGB Stars

Take the linear RGB stars nonlinear with HT and adjust them by eye until the image looks pretty good.

Use CT to adjust the tone scale and color saturation to create the final look for the image. Create three levels of stars so I can decide which looks better: Small, medium, and large.

Choose the medium level of stars for the final image. The large was too much, but the medium level showed the stars nicely and had good color.

The initial RGB nonlinear star image.

Smallest Stars version.

Medium Sized Stars.

Large Stars version.

11. Add the Stars Back In and Complete Processing

Use the StarScreen script to add the stars back in.

Rotate the image 180 degrees to get the best view

Run a final denoise with NXT = 0.64

Run DarkStructureEnhance to bring out the dust lanes a bit
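Recombining with a screen blend rather than plain addition is what keeps stars from clipping where they land on bright parts of the galaxy. The sketch below shows the standard screen formula; it assumes, without having checked the script's source, that the StarScreen/ScreenStars script applies essentially this operation.

```python
import numpy as np


def screen(starless: np.ndarray, stars: np.ndarray) -> np.ndarray:
    """Screen blend: 1 - (1 - a) * (1 - b). Stays in [0, 1] for inputs in [0, 1]."""
    return 1.0 - (1.0 - starless) * (1.0 - stars)


starless = np.array([0.1, 0.5, 0.8])   # sky, galaxy arm, bright core
stars = np.array([0.0, 0.5, 0.5])      # no star, then a star over each
print("add:   ", np.clip(starless + stars, 0.0, 1.0))
print("screen:", screen(starless, stars))
```

With the star layer at zero the starless pixel passes through unchanged, and where a star lands on a bright area such as the core, the result rises toward 1 without clipping, unlike plain addition.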

The Final Starless Image.

The Final RGB Stars image.

The ScreenStars script combines the stars and starless images.

Initial Starred image!

Rotate the image 180 degrees.

Before and After the Final NXT

After DarkStructureEnhance.

12. Move to Photoshop and Do the Final Polish

Export as 16-bit TIFF

Open in Photoshop

Use the Camera Raw filter to tweak color, tone, and Clarity

Use Camera Raw ColorMixer to adjust the blues and orange tones

Select the outer clouds and boost the blue saturation

Add watermarks

Export various versions of the image

The Result!

The portable scope platform is supposed to be, well, portable. That means light and compact. In determining how to pack this platform for travel, I realized that the finder scope mounting rings made no sense in this application and I changed them out with something both more rigid and compact - the William Optics 50mm base-slide ring set.